Deep learning – a type of neural network architecture that emerged a few years ago – has been getting a lot of attention lately, with Google, Facebook, and Microsoft committing an ever-increasing number of their projects to using it in their quest of intelligent computing. At DiUS, we are experimenting with deep learning to explore the value it can add to our core competencies of software and hardware development.

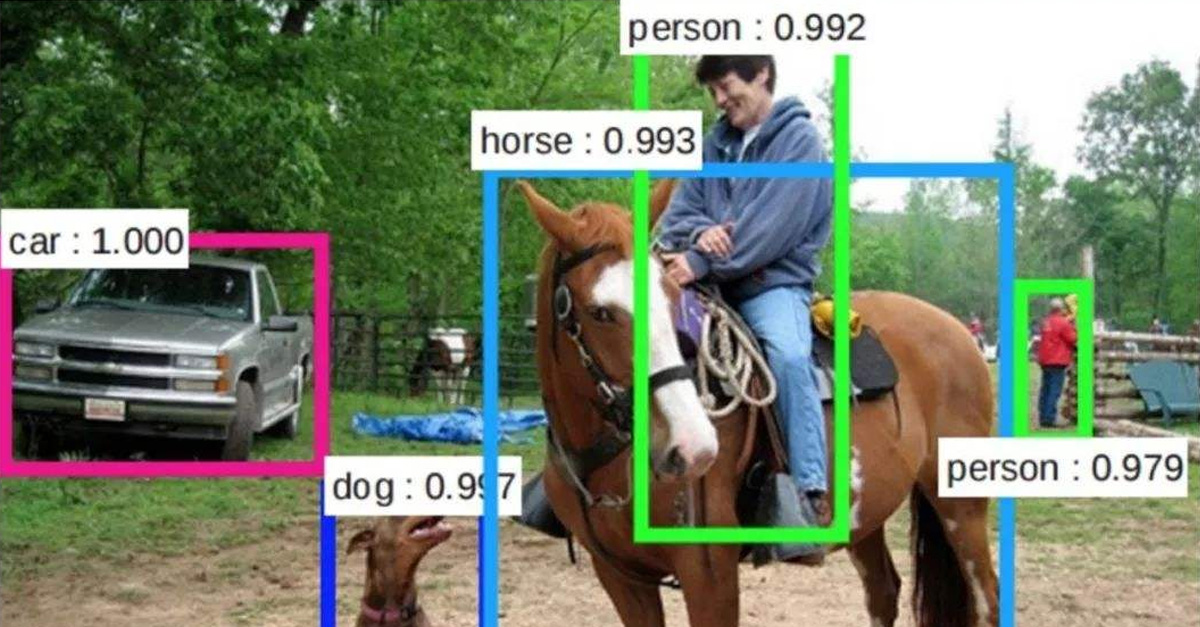

The numbers following the object names indicate the model’s confidence in its classification of the object. Source: Shaoqing Ren, Kaiming He, Ross Girshick, Jian Sun, Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks, arXiv:1506.01497

My personal interest in deep learning goes back 25 years, to my Masters degree research of neural networks and their application to unsupervised speech recognition. Using computational structures that mimic the brain – even somewhat simplistically as neural networks do – has always interested me. I hoped (and still do) that one day it could lead to artificial intelligence. My interest was renewed recently when I wanted to automate the analysis of aerial imagery from my drone work in precision agriculture at DiUS; the major advances made by researchers using deep neural networks for a variety of difficult image processing tasks was very impressive. I could also see how we could apply it at DiUS to customer data and make our applications more intelligent.

The sophistication and ability of neural networks has grown significantly over the last 25 years. Instead of the traditional approach where each node in a layer was connected to each node in one or two preceding and succeeding layers (also known as a fully connected network), researchers have developed new types of specialised layers and combined many of them to form networks tens or sometimes hundreds of layers deep. This approach has yielded image recognition accuracy that now surpasses human performance.

A deep neural network such as this one from Google, named Inception, is an example of a Convolutional Neural Network (CNN). It employs many specialised layers that learn important features with increasing complexity as the data moves through the network from left to right.

The two main enablers for this advance are that researchers have more open-sourced well-labelled data with which to train, and they have faster and cheaper GPUs (Graphics Processing Units) for their experiments.

The data effect

The availability of large well-labelled data sets, combined with ease of finding them, has given machine learning in general a big push, and this is particularly true for deep neural networks. Some of the most commonly benchmarked data sets for comparing the effectiveness of models are:

- ImageNet – a database of images with the same hierarchical organisation of the nouns in WordNet, a lexical database for English. ImageNet has over 14,000,000 labelled images.

- MNIST – a collection of handwritten digits sampled from US Census Bureau employees and secondary school students. It has a training set of 60,000 examples, and a test set of 10,000 examples.

- CIFAR10 – a collection of 60,000 colour images, each 32×32 pixels, assigned to 10 label classes, such as airplane, automobile, bird, etc. The images are small to enable faster training times and experimentation.

The GPU effect

Training a deep neural network on a GPU is at least ten times faster than on a CPU. There are two main reasons:

- GPUs are optimised for matrix multiplication, the computational basis for generating and transforming 3D models, and creating lighting effects. Neural networks use a lot of matrix multiplication as well.

- GPUs have a much larger number of simple cores than a CPU. A modern CPU such as an Intel i7 6900K has 8 complex cores. A recent GPU card such as the NVIDIA GTX 1080 has 2560 cores, though each core specialises in performing many simple operations in parallel. This feature is well-aligned with the computation required in neural networks.

One of the most interesting things about using deep neural networks for image processing is how it differs from feature engineering, the more common approach to image understanding:

- Feature engineering involves understanding the important features to look for in order to classify an image, detect objects, and so on. Demanding tasks usually required combining several common algorithms in a series of steps, or hand-crafting an algorithm.

- Neural networks on the other hand are able to identify the key features without supervision, by rewarding or penalising nodes and their weights that lead to a lower or higher error. Through large numbers of forward and backward passes, making small adjustments to the node weights on each pass, a network is able to learn how to map the output from the input. Thus, a neural network researcher needs to understand the shape of the data, but may not know in advance what are the important features to be extracted. This makes neural networks more flexible than feature engineering in finding features in the data that are not obvious or apparent from human analysis, perhaps due to the complexity of the data or its volume.

Deep neural networks are capable of learning patterns in both sequences (such as time series data, text, sound, video frames) and 2D space (such as images). Here are some examples of things these networks can do:

Detect objects in an image

Deep neural networks are good at detecting objects in an image, such as these vehicles in an image taken from a drone flying overhead. Note the model handles vehicle rotation and occlusion. Source: NVIDIA.

An extract of a 3,200 x 415,000 pixel false-colour image created from the output of a mass spectrometer, showing detection of protein isotope peaks. Knowing where the peaks occur helps biochemists identify biomarkers that are used, for example, to determine a patient’s propensity to respond well to certain drug treatments.

Describe a scene

Deep neural networks can identify the main objects in a scene, and then form a complete sentence that describes what is happening in the scene. Source: Andrej Karpathy, Li Fei-Fei, Deep Visual-Semantic Alignments for Generating Image Description, CVPR, 2015

Classify an image, text, sound

In a recent proof of concept at DiUS, we trained an Inception model to classify paintings from the Kaggle paintings dataset, a collection of nearly 80,000 paintings by 1,600 artists. One of the questions we posed the model was “what is the style of this painting?”.

These paintings were correctly classified to be in the Realism style.

These paintings were correctly classified to be in the Pointillism style.

We then asked the model “which artist created this painting?”. The Kaggle dataset does not identify the artists’ names – only identifying them a unique ID – but the model correctly identified that the paintings were created by the same artist. A subsequent check with the reverse image search engine Tin Eye revealed the artist to be John Trumbull.

Our trained deep neural network correctly identified these paintings to be created by the American Revolutionary War artist John Trumbull.

Generate an image, text, sound in a trained style.

For example, sonnets in the style of Shakespeare, or paintings in the style of Monet.

A type of deep neural network called a Recurrent Neural Network (RNN), designed to learn and then predict sequences, or generate new sequences, can be trained on a style of text (i.e. a sequence of characters) and can generate new sentences. This has been applied to Wikipedia articles, and the works of Tolstoy and Shakespeare.

MARK ANTONY:

I might have stay’d, and I met your triumphant cousin;

And in the world,

Whose parties, that it were two bodies, to discharge

A glumber to a perfect tower.KING HENRY V:

And I must not see her, but go’st with unhappy woe.

Some selected works of Shakespeare were fed into an RNN, and the model generated this new text in the learned style. The model has learned from sequences of characters, and can generate correct actor names, valid words, spaces and punctuation. Source: The Unreasonable Effectiveness of Recurrent Neural Networks.

Predict the next step in a sequence of events

Deep neural networks can learn arbitrary functions that map from an input to a desired output. To experiment with this ability, in a DiUS experiment we tried something simple: teach a deep neural network the sine(x) function. The model learned ten cycles of sine(x), then we gave it a value and asked it to predict the next value 20 steps into the future. The potential application of this ability is to predict business events, such as point-of-sale events, or web site traffic.

Using a deep neural network to predict time series data. The model was trained on a sine function (shown in red) and was then asked to predict a value 20 steps into the future (shown in green). A perfect prediction of the future would mean the two lines are exactly aligned, which they nearly are.*

What it means for business

Applications for deep learning really depend on the amount and type of data available, but deep neural networks are very flexible and ideally suited to tasks that are difficult for humans, due to the difficulty of finding patterns in large amounts of data. These tasks include:

- Anticipating a customer’s needs and preferences from their previous behaviour.

- Determining sentiment toward a brand appearing in text, images, and video on social media.

- Predicting the level of social media engagement by analysing the content of a press release.

- Automating metadata tagging of unstructured collateral, such as video and datasets.

- Agents or assistants collaborating with a human user rather than being their servants.

- Classifying business events from trends in data, and predicting trends.